Packet Loss

What is Packet Loss?

Derived from the Director's Blog

The Internet uses a system of packets to send information. This means that whatever you are doing, whether accessing Facebook, making a Skype call, playing an online game, downloading a file or reading an email, the information is broken down into packets. These are not always the same size, and typically carry up to around 1500 bytes (or characters) of data at a time.

Each of these packets carries some addressing information, and some data. The fact that packets are used means it is possible to have lots of things happening at once, with bits of one thing in one packet followed by bits of something else in another packet and so on, mixing up multiple things on one Internet connection. This is how it is possible for lots of people to use an Internet connection at once. The addressing data in the packet makes sure the right things go to the right place and are put back together at the far end.
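Here is a minimal Python sketch of addressing and mixing. The `Packet` fields, the flow names and the 1500-byte payload limit are illustrative assumptions, not the real IP header layout. Each message is chopped into numbered pieces, and each piece is tagged with the conversation it belongs to. Pieces from different conversations share one link, and the far end uses the tags to sort them back out.

```python
from dataclasses import dataclass
from itertools import zip_longest


@dataclass
class Packet:
    flow: str    # hypothetical "addressing": which conversation this belongs to
    seq: int     # position of this piece within that conversation
    data: bytes  # the slice of the original message carried by this packet


def packetise(flow: str, message: bytes, max_payload: int = 1500) -> list[Packet]:
    """Break a message into packets carrying at most max_payload bytes each."""
    return [
        Packet(flow, seq, message[offset:offset + max_payload])
        for seq, offset in enumerate(range(0, len(message), max_payload))
    ]


def interleave(*streams: list[Packet]) -> list[Packet]:
    """Mix packets from several flows onto one link, taking one from each in turn."""
    link = []
    for group in zip_longest(*streams):
        link.extend(p for p in group if p is not None)
    return link


def reassemble(link: list[Packet]) -> dict[str, bytes]:
    """Use each packet's addressing to put every message back together."""
    by_flow: dict[str, list[Packet]] = {}
    for packet in link:
        by_flow.setdefault(packet.flow, []).append(packet)
    return {
        flow: b"".join(p.data for p in sorted(packets, key=lambda p: p.seq))
        for flow, packets in by_flow.items()
    }
```

For example, `interleave(packetise("email", b"e" * 4000), packetise("call", b"v" * 500))` puts email, call, email, email packets on the link. `reassemble` then rebuilds both messages intact.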

This is all very different to old-fashioned phone calls, which work on circuits. A circuit is a means of sending data (e.g. voice) continuously at a specific speed between two points, with the capacity of that link reserved for the duration of the call. Either you manage to establish the call (the circuit) at the start, or you don't. Once you have it, the circuit stays in place until you finish. It is a very different way of working to packets.

One of the problems you get is what happens when a link of some sort gets full.

With a circuit-based system like phone calls, a full link (i.e. one already carrying as many calls as it can) means you get an equipment engaged tone. The call fails to start.

However, with a packet-based system, a full link first builds a queue of packets waiting to go down it (adding delay), and ultimately packets are dropped, i.e. thrown away. This can, and does, happen at any bottleneck anywhere in the Internet. The most likely bottleneck is the link between the Internet and your own Internet connection.
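A toy model of a full link with a simple drop-tail queue shows both effects: first delay, then loss. This is a sketch under simplified assumptions (fixed time ticks, a hypothetical queue limit), not a model of any particular router.

```python
def drop_tail(arrivals: list[int], service_per_tick: int, queue_limit: int) -> list[tuple[int, int]]:
    """Toy model of a bottleneck link with a drop-tail queue.

    arrivals: packets offered to the link in each tick.
    service_per_tick: packets the link can actually send per tick.
    queue_limit: how many packets the router can hold waiting.
    Returns (queue length after sending, packets dropped) for each tick.
    """
    queue = 0
    history = []
    for arriving in arrivals:
        accepted = min(arriving, queue_limit - queue)  # only what fits is queued
        dropped = arriving - accepted                   # the rest is thrown away
        queue += accepted
        queue -= min(queue, service_per_tick)           # the link drains the queue
        history.append((queue, dropped))
    return history
```

For example, `drop_tail([5] * 20, service_per_tick=4, queue_limit=10)` offers more than the link can send. For the first few ticks the queue grows, and so does the delay (roughly the queue length divided by `service_per_tick`). Once the buffer is full, one packet per tick is dropped. Offering 3 packets per tick to the same link never builds a queue at all.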

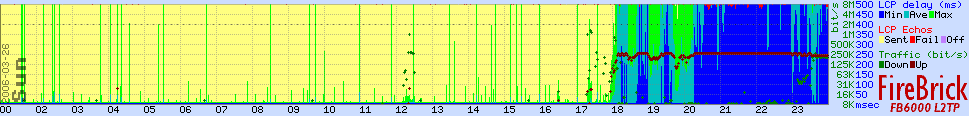

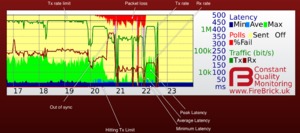

The example above shows a line with occasional short uploads causing spikes in peak latency, and then a sustained upload starting at around 6pm causing high latency (a queue in the router). At 8pm more upload traffic filled the link, causing higher latency still and some loss. This is normal when the link is full.

How Does AAISP Monitor Packet Loss?

Through our CQM Graphs.

AAISP monitor all lines every second, measuring loss, latency and usage. There are more details on the CQM Graphs page.

We can also set up a separate ICMP ping graph to the WAN address, and then compare the two sets of results for more information.

So packet loss is normal. It is what happens when a link is full.

The result of this packet loss depends on the protocol. The overall effect on any sort of data transfer, such as downloading a file or sending an email, is that the transfer happens at a slower speed: the end points send data more slowly so that fewer packets are dropped. Importantly, with a lot of protocols the missed packets are re-sent, which means the data does not have gaps in it.
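The re-sending part can be sketched as a toy stop-and-wait sender in Python. Real protocols such as TCP use acknowledgements, timers and windows. The seeded random loss and the `max_tries` limit here are assumptions for illustration only.

```python
import random


def send_reliably(chunks: list, loss_rate: float, seed: int = 0, max_tries: int = 50):
    """Toy stop-and-wait sender: keep resending each chunk until one copy arrives.

    loss_rate is the (hypothetical) chance that any single transmission is lost.
    Returns the chunks as received and the total number of transmissions.
    """
    rng = random.Random(seed)  # seeded so the run is repeatable
    received = []
    transmissions = 0
    for chunk in chunks:
        for _ in range(max_tries):
            transmissions += 1
            if rng.random() >= loss_rate:  # this copy got through
                received.append(chunk)
                break
        else:
            raise RuntimeError("gave up: link is too lossy")
    return received, transmissions
```

With a hypothetical 20% loss rate, every chunk still arrives in order with no gaps. The cost is extra transmissions, i.e. time. A phone call has no time to wait for a resend, which is why VoIP can't work this way.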

Some protocols do not allow resending or slowing down. These include VoIP calls, such as Skype, where you can't slow down a phone call. In such cases you get gaps in the call: break-up, pops, etc.

Some systems are clever and decide which packets to drop when a link is full. They give protocols like VoIP a chance to get through, and drop packets from protocols that can back off if needed. A&A does this, for example.
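The sketch below shows one simple way such a policy could work. It is a hypothetical illustration, not A&A's actual implementation. Packets are tagged as "realtime" (such as VoIP) or "bulk" (such as a download). When the queue is full, an arriving realtime packet pushes out a queued bulk packet instead of being dropped itself.

```python
from collections import deque


class PreferBulkDropQueue:
    """Toy bounded queue that sacrifices traffic able to back off.

    When full, an arriving "realtime" packet evicts the most recently queued
    "bulk" packet; any other arriving packet is simply dropped.
    """

    def __init__(self, limit: int):
        self.limit = limit
        self.queue: deque = deque()
        self.dropped: list = []

    def enqueue(self, kind: str, payload) -> None:
        if len(self.queue) < self.limit:
            self.queue.append((kind, payload))
            return
        if kind == "realtime":
            # Search from the back for a bulk packet to sacrifice.
            for i in range(len(self.queue) - 1, -1, -1):
                if self.queue[i][0] == "bulk":
                    self.dropped.append(self.queue[i])
                    del self.queue[i]
                    self.queue.append((kind, payload))
                    return
        self.dropped.append((kind, payload))  # nothing better to drop

    def dequeue(self):
        """Send the packet at the head of the queue, or return None if empty."""
        return self.queue.popleft() if self.queue else None
```

In this example, filling a 3-packet queue with bulk packets and then offering a realtime packet drops the newest bulk packet instead. A further bulk packet arriving while the queue is still full is the one thrown away.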

However, there is another scenario where you can get packet loss, and this is where there is a fault. In the case of a fault you will find some packets are dropped at random. What usually happens is that some of the data in the packet is corrupted (changed) by random noise or errors from the fault, so the packet no longer checks out when it gets to the other end. Packets have built-in checks to confirm nothing was changed, and if that check fails the packet is dropped.
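To illustrate such a check, the sketch below appends a CRC-32 to each payload using Python's standard `zlib.crc32`. This is similar in spirit to the frame check sequence on Ethernet, though the layout here is simplified and assumed. Flipping a single bit anywhere in the frame makes the check fail, so the packet is dropped.

```python
import zlib
from typing import Optional


def add_check(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 of the payload (simplified frame layout)."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def check_and_accept(frame: bytes) -> Optional[bytes]:
    """Return the payload if the check value matches, else None (packet dropped)."""
    payload, received_crc = frame[:-4], frame[-4:]
    if zlib.crc32(payload).to_bytes(4, "big") != received_crc:
        return None
    return payload


def flip_bit(frame: bytes, bit: int) -> bytes:
    """Simulate line noise corrupting a single bit of the frame."""
    corrupted = bytearray(frame)
    corrupted[bit // 8] ^= 1 << (bit % 8)
    return bytes(corrupted)
```

A clean frame passes and returns its payload. The same frame with one bit flipped, whether in the data or in the check value itself, is rejected.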

The effect of fault based packet loss depends on the protocol.

For protocols like VoIP, a dropped packet simply means break-up in the call. Even a low level of packet loss can mean annoying pops and gaps in the call.

For protocols that can back off and slow down, well, that is what they do. They cannot tell that the packet loss is the result of a fault and not of a full link, so they slow down. But even when they slow down, they still get packet loss, as it is random. So they slow down even more. They don't understand the problem, and just assume that the link must be full no matter how slow they go.

Imagine driving a car with no speedometer, just a light that says "driving too fast". That is fine: when you see the light, you slow down, and you stop seeing the light. That means you drive at the right speed. But if the light is faulty and says "driving too fast" at random, you will slow down, still see the light, slow down more, and before you know it you are crawling along at walking speed.

This means that even low levels of random packet loss can massively slow down data transfers.
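A toy simulation makes the "faulty light" effect visible. The sketch below is a simplified AIMD (additive increase, multiplicative decrease) sender, which is the basic back-off idea behind TCP congestion control. It runs on a link with a hypothetical capacity of 100 packets per round trip. It halves its sending rate on any loss, whether that loss comes from the link being full or from a random fault.

```python
import random


def aimd_utilisation(fault_loss: float, capacity: int = 100,
                     rounds: int = 10_000, seed: int = 1) -> float:
    """Toy AIMD sender (TCP-like back-off), one step per round trip.

    fault_loss: chance each packet is independently lost to a line fault.
    capacity: packets per round trip the link can carry (hypothetical figure).
    Returns the fraction of the link's capacity that was actually delivered.
    """
    rng = random.Random(seed)  # seeded so the run is repeatable
    window = 1                 # packets the sender tries to send this round trip
    delivered = 0
    for _ in range(rounds):
        sent = min(window, capacity)  # anything beyond capacity overflows the queue
        lost = sum(rng.random() < fault_loss for _ in range(sent))
        delivered += sent - lost
        if window > capacity or lost:
            window = max(1, window // 2)  # any loss looks like a full link: halve
        else:
            window += 1                   # no loss: speed up a little
    return delivered / (capacity * rounds)
```

With no fault, the sender settles into a sawtooth that uses most of the link. With 1% random loss, 99% of packets still get through. Yet `aimd_utilisation(0.01)` comes out well under half of the clean figure, because the sender keeps halving before it ever gets near full speed.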

Packet loss when a link is otherwise idle is a fault.

The problem is that when you measure packet loss you do not always know whether the link is full. Your tests of packet loss, usually done with ping (ICMP echo), could be losing packets because the link is full with someone sending an email, or because of a fault.

The key is to measure packet loss when a link is otherwise empty of traffic, so that the only reason to drop packets is because of a fault.
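This can be sketched as a filter over per-second measurements that only counts test-packet loss during seconds when the link was otherwise quiet. The `Sample` structure and the 10% idle threshold are hypothetical choices for illustration, not how AAISP's CQM graphs are actually computed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Sample:
    usage: float  # fraction of link capacity in use during this second (0 to 1)
    sent: int     # test packets (e.g. pings) sent in this second
    lost: int     # test packets that did not come back


def idle_loss(samples: list[Sample], idle_threshold: float = 0.1) -> Optional[float]:
    """Loss rate counted only over seconds when the link was otherwise quiet.

    Busy seconds are ignored, because loss there may simply mean the link was
    full. Returns None if there were no quiet seconds to measure.
    """
    sent = sum(s.sent for s in samples if s.usage < idle_threshold)
    lost = sum(s.lost for s in samples if s.usage < idle_threshold)
    return lost / sent if sent else None
```

A lost ping during a second when the link is 95% busy is ignored. A lost ping on a quiet line counts as evidence of a fault.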

The other problem with measuring loss is how you measure it. The normal measure is percentage loss: if you send 100 packets, how many arrive and how many are lost? This is fine, but random corruption is much more likely to cause a packet to be lost if the packet is bigger. So you have to look at packet loss together with packet size. From this you can work out a rate of corruption on the link and predict the loss for other packet sizes.
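One way to turn this into numbers is to assume that each byte of a packet is corrupted independently with the same small probability $q$. Real faults are often burstier, so treat this as a rough model rather than AAISP's own method. Under that assumption, a packet of $n$ bytes only survives if every byte does:

$$
P_{\text{loss}}(n) = 1 - (1 - q)^{n}
$$

If loss $P_{\text{loss}}(m)$ is measured with $m$-byte packets, then $(1 - q)^{m} = 1 - P_{\text{loss}}(m)$, so

$$
q = 1 - \bigl(1 - P_{\text{loss}}(m)\bigr)^{1/m},
\qquad
P_{\text{loss}}(n) = 1 - \bigl(1 - P_{\text{loss}}(m)\bigr)^{n/m}.
$$

For example, 1% loss measured with hypothetical 100-byte pings predicts $1 - 0.99^{15} \approx 14\%$ loss for 1500-byte packets.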

The best measure of loss as a simple percentage is the loss when sending full size packets (1500 bytes), which is what data transfer protocols like TCP use. Even 1% or 2% loss of such packets can cause TCP to slow down massively. It does not work like taking away a couple of percent of speed: the data transfers keep slowing down as they keep thinking the line must be full.
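The Mathis et al. approximation for TCP Reno-style congestion avoidance is a widely quoted way to see why loss hurts TCP so much. It says throughput is at most about (MSS / RTT) × C / √p, where C ≈ √(3/2) and p is the loss rate. It is an approximation with its own assumptions, and modern variants such as CUBIC or BBR behave differently. Treat this as an illustration rather than a prediction for any particular line.

```python
import math


def mathis_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate upper bound on one TCP Reno-style connection's throughput.

    rate <= (MSS / RTT) * C / sqrt(p), with C = sqrt(3/2), in bits per second.
    """
    if loss_rate <= 0:
        return math.inf  # the model says nothing about a loss-free path
    c = math.sqrt(1.5)
    return (mss_bytes * 8 / rtt_s) * c / math.sqrt(loss_rate)
```

Take a 1460-byte MSS and a hypothetical 20 ms round trip. With those figures, 1% loss caps a single TCP connection at roughly 7.2 Mbit/s, and 2% loss caps it at roughly 5.1 Mbit/s, however fast the line itself is.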

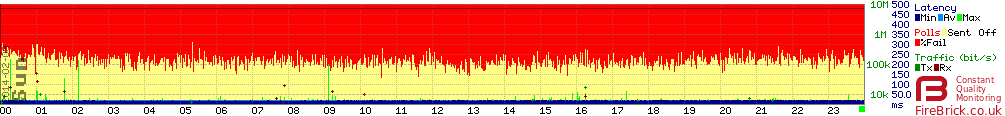

Packet loss on an idle line is always bad news...

...even if only 1% (one red dot at the top is 1%)

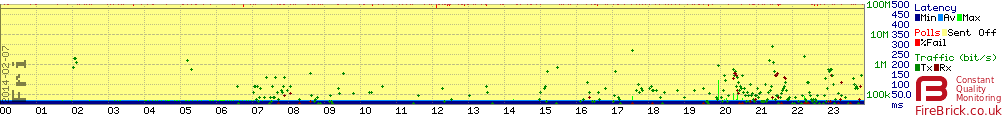

2% loss is not like 98% working speed

A simpler and less intrusive measure of loss is a short LCP echo. LCP echoes are a normal part of most Internet links, and A&A send them every second and record the loss for every line. Each echo is only a few bytes, so echo loss that is a fraction of a percent could mean several percent loss at full packet sizes. This is why it is so important to take even very low levels of LCP echo loss seriously.
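Here is a minimal Python sketch of scaling echo loss up to full-size packets. It assumes each byte is independently corrupted with the same probability, which is a simplification since real faults are often bursty. The 50-byte on-the-wire size used in the example is a hypothetical figure; the real size of an LCP echo on a line depends on its encapsulation.

```python
def scale_loss(measured_loss: float, measured_bytes: int, target_bytes: int = 1500) -> float:
    """Predict loss at target_bytes from loss measured at measured_bytes.

    Assumes independent, uniform per-byte corruption (a simplification).
    """
    if not 0 <= measured_loss < 1:
        raise ValueError("measured_loss must be in [0, 1)")
    per_byte_survival = (1 - measured_loss) ** (1 / measured_bytes)
    return 1 - per_byte_survival ** target_bytes
```

`scale_loss(0.001, 50)` turns 0.1% echo loss into roughly 3% predicted loss for 1500-byte packets.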